We’ve written before about the importance of designing reliable products. That’s all very well, but a common question we’re asked is how to do product reliability testing in order to assess and validate reliability?

In this post, I’ll take you through the entire process.

We’ll talk about what reliability is, how to make a product reliable, some examples where poor reliability caused brands a lot of trouble, how to create a reliability test plan and test cases, and more. The focus is mainly on small electronic and consumer products, but the concepts apply to just about any type of product, small or large.

The Author

Our head of New Product Development, Andrew Amirnovin, is an electrical and electronics engineer and is an ASQ-Certified Reliability Engineer.

He is our customers’ go-to resource when it comes to building reliability into the products we help develop. Before joining us he honed his craft over the decades at some of the world’s largest electronics companies such as Nokia, AT&T, LG, and GoPro.

At Agilian, he leads the New Product Development team, works closely with customers, and helps structure our processes.

Table of Contents


- Introduction to quality and product reliability

- Why is reliability testing necessary?

- Some bad product reliability examples that hit the news

- How to make a product reliable?

- How do we prepare for the reliability testing of a product (test requirements)?

- Understanding what kind of test cases are appropriate for your product type

- How to create a reliability test plan?

- Testing equipment

- Doing the tests in the lab

- Reliability project management

- Data analysis and creating the reliability test report

- Using the data to improve the product in further builds

- How can you get help from Agilian?

- Further resources

Introduction to quality and product reliability

Before we talk about reliability, let’s define quality, as the two are different but related. According to ASQ:

quality can have two meanings: 1) the characteristics of a product or service that bear on its ability to satisfy stated or implied needs; 2) a product or service free of deficiencies. According to Joseph Juran, quality means “fitness for use”; according to Philip Crosby, it means “conformance to requirements.”

So quality is basically about how well the product is designed and manufactured. Even so, a product that meets its quality requirements today may still not be ‘reliable.’

So, what is product reliability?

ASQ (the American Society for Quality) defines reliability as:

The probability of a product performing its intended function under stated conditions without failure for a given period of time.

So what this really means is that a product must operate to the specification it was designed for not only today, but also for the next two or five years, or whatever lifespan it was designed for. That is what reliability really means in this context and what consumers are looking for when making a purchase.
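To make the ‘probability of performing without failure for a given period of time’ idea concrete, here is a minimal Python sketch. It assumes a constant failure rate (the exponential model), which is a simplification introduced here rather than something the ASQ definition requires. The function name `reliability`, the 100,000-hour MTBF, and the assumption of round-the-clock use are all hypothetical.

```python
import math


def reliability(t_hours: float, mtbf_hours: float) -> float:
    """Probability of running for t_hours without failure.

    Assumes a constant failure rate (exponential model): R(t) = exp(-t / MTBF).
    """
    if t_hours < 0 or mtbf_hours <= 0:
        raise ValueError("t_hours must be >= 0 and mtbf_hours must be > 0")
    return math.exp(-t_hours / mtbf_hours)


# Hypothetical example: a product with a 100,000-hour MTBF, used 24 hours a day.
two_years_hours = 2 * 365 * 24
five_years_hours = 5 * 365 * 24

print(f"R(2 years) = {reliability(two_years_hours, 100_000):.3f}")
print(f"R(5 years) = {reliability(five_years_hours, 100_000):.3f}")
```

The point is simply that reliability is always stated for a period of time: the same product has a lower probability of surviving five years than two.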

Why is reliability testing necessary?

If you are designing a product that is not reliable enough you’re going to end up with massive product returns and, possibly, rework and repair on all those returns. In some cases where reliability failure leads to safety issues, you might be forced to do a product recall.

All this is costly because you might have to hire additional staff to inspect and repair/rework the products. Your customers will also be dissatisfied and give you a lot of complaints resulting in a tarnished brand name, loss of sales and revenues, high warranty costs, and more. It’s hard to deal with a lot of negative reviews online and, in the case of customer injury or harm, you might even have some legal actions against you and damages to pay.

A lot of companies have gone out of business because they designed a ‘bad product’ that was unreliable. Design a reliable product instead, and you don’t need to deal with any of those problems!

Some bad product reliability examples that hit the news

If we don’t do reliability testing, another thing that can happen is a catastrophic reliability failure of the kind we have seen in some high-profile cases:

Samsung Galaxy Note 7

Image credit: Samsung

If you recall what happened to the Samsung Galaxy Note 7 in 2016, the batteries were overheating and catching fire or exploding, sometimes after the device was dropped or bent and sometimes for no apparent reason. This put consumers in harm’s way, and it got to the point where people couldn’t even take a Note 7 on board an airplane!

Samsung lost over US$5.3bn, or at least this is the figure mentioned in the press. In reality, they lost a lot more in sales, and their brand remains tarnished to this day even though Samsung phones are still very popular. Interestingly, Samsung discontinued the ‘Galaxy Note’ name in 2022, and similar phones are now named the ‘Galaxy Ultra.’ Evidence of the reputation damage that the Note 7 caused? Quite possibly.

Boeing 737 Max

Pieces of the wreckage of an Ethiopian Airlines Boeing 737 Max 8 aircraft. (Stringer/EPA-EFE/Shutterstock)

Image source: Washington Post

A really tragic and catastrophic reliability failure is Boeing’s 737 Max, two of which crashed in 2018 and 2019. In the two separate crashes, 346 people died. The failure was eventually traced to faulty sensor data and software (the Maneuvering Characteristics Augmentation System), and a fix was still being worked on when the second crash occurred. Many have questioned why the jets were allowed to continue flying and, adding to Boeing’s reputational damage, a well-received Netflix documentary about the disaster, ‘Downfall,’ came out in 2022.

Boeing lost a huge amount of production while the jets were grounded, which slashed their profits, and, of course, they had to deal with numerous lawsuits from angry relatives as well as paying US$2.5bn in fines and compensation in a January 2021 settlement with the US Department of Justice.

How to make a product reliable?

There are a number of ways to make a product reliable, but the most important is to test it during the product development phase.

Typically you want to start with 3 elements:

- Good components

- Good design

- Good testing plan

Testing helps you understand what’s wrong with the product early in design and development so that you can fix it as you go along. You don’t want to wait until the product is finished before doing reliability testing: by then it may be too late for a redesign or a fix, or the work will be costly and cause delays.

You also need to understand your customers’ requirements (such as how durable the product might need to be if it’s used outside) and where and how the customer is going to use this product. A person using a product in Hawaii, for example, where the weather is really nice and warm, will be in a different environment to someone in, say, Alaska, where it’s really cold most of the time. So in order to be reliable, the product you’re making must be durable enough to be used in conditions at different extremes: cold, hot, and humid.

So, before you do the actual product design you need to understand your product’s use case environments as described above. You also need to consider product storage requirements and be mindful of the shipping conditions that the product will endure in transit. Requirements, environment, and shipping and storage considerations will help you create the right reliability test plan and find any issues early in development. You then fix the issues as you go through the next prototype build, and so on until you’re ready for mass production. In consumer electronics, this cycle continues through the engineering, design, and production validation builds (EVT, DVT, PVT) that precede mass production.

How do we prepare for the reliability testing of a product (test requirements)?

Let’s focus now on how to create a test plan and test cases and be able to actually start doing reliability testing.

If you are in a company environment, you really need to work closely with the design, R&D, manufacturing, and testing teams so that everyone is on the same page about the reliability testing you’re going to be doing. You don’t want to request a large number of samples (prototypes for testing) and then end up having none. You also don’t want to spend time planning a lot of tests that colleagues might then object to. The best approach is to draft the reliability test cases that are applicable to this product, get agreement on them from all stakeholders, combine them into a reliability test plan, and then make sure all the testing requirements are in place to actually run them.

Prototype samples for testing

Oftentimes, especially at the early prototype stage, companies don’t have enough samples or the budget to break those samples during tests and produce more. However, the reliability and quality teams really need those samples for testing, so there’s often a slight push and pull between them and the teams developing the product and producing prototypes. Reliability may request ‘X-number’ of samples and will be unable to do their job if they can’t break them.

When it comes to reliability and quality, this is a key instance where you need management support so that enough samples are made for everyone within the company, particularly reliability and quality, to do their jobs properly.

The number of samples you need depends on what stage of product development you are at. In the early stages you don’t necessarily need a huge number of samples for testing because you know that even a small set of samples is going to fail. The product is fresh and new, so this is normal. At this point, what the product R&D team really wants you to tell them is what the most commonly occurring failure modes are so they can fix those. Almost 80 per cent of the samples are going to have failures, so just focus on the most serious failure modes here.

Once you move from the first to the second work-alike prototype build, otherwise known as moving from EVT to DVT, you need to increase your sample sizes. If you don’t have enough samples, you may not catch all the failures you’re looking for. Let’s say you used 20 samples in the first build: there’s a good chance that in the second build you’ll need 30 or even 40 to be able to catch any serious issues. As the product design matures, failures become rarer, so if you keep going towards production with a sample size that’s too small you may not see the failures that remain.

How to calculate how many samples you need?

It all really depends on your product type. For small products like mobile phones, tablets, or small consumer products you don’t necessarily need a statistical approach to define the number of samples required. You could just test anywhere from 5 to 20 samples for each test case and you should be fine catching the problems. However, if you do want to work out the minimum statistical sample size, calculators that do this are easy to find online.
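If you want to see the kind of arithmetic behind such a calculator, here is a minimal Python sketch of one common approach, the zero-failure ‘success run’ formula n = ln(1 − C) / ln(R). This is one technique among several and not necessarily what any particular online calculator uses. The function name and the reliability and confidence targets below are assumptions chosen for illustration.

```python
import math


def zero_failure_sample_size(reliability: float, confidence: float) -> int:
    """Smallest number of samples that must ALL pass a test to demonstrate
    the target reliability at the target confidence (success-run formula).
    """
    if not (0 < reliability < 1 and 0 < confidence < 1):
        raise ValueError("reliability and confidence must be between 0 and 1")
    return math.ceil(math.log(1 - confidence) / math.log(reliability))


# Hypothetical targets: (reliability to demonstrate, confidence level).
for r, c in [(0.90, 0.90), (0.90, 0.95), (0.95, 0.90)]:
    n = zero_failure_sample_size(r, c)
    print(f"R={r:.2f}, C={c:.2f}: test {n} samples with zero failures")
```

Notice how quickly the sample count grows as the targets tighten, which is why sample requests get larger as you approach production.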

Typically, 33 samples for a test would approximately give you a Cpk (process capability index) of up to 1.33, and that would be sufficient to provide pretty good statistical reliability results. Remember, that’s 33 samples for just one product test (and several different ones may be performed), demonstrating how many samples might be required, especially as you get closer to production.

For test cases that are critical to the quality of the design, manufacturing, or use case environment, it is wise to go with slightly higher sample sizes. For test cases where a failure would be less critical, a smaller sample size may be OK, but you’ll still need at least a minimum statistical sample size of somewhere between 3 and 33.

How to reuse samples?

One very important thing that a reliability engineer must keep in mind, and that experienced reliability engineers typically notice, is that some of the samples can be reused in more than one test. For example, let’s say you did a temperature & humidity test on a batch of samples and they all passed. Can you do a button press or other test in order to gather even more data from those same samples? Probably, yes. So an experienced reliability engineer looks to get more data out of each sample, and can create a test “waterfall”.

In the waterfall test process, you take one sample and, for example, run a high-temperature test on it; then the same sample goes through a low-temperature test, a drop test, etc. You will be able to see when it breaks, but maybe not why. By reusing an aged sample you are sometimes actually contributing to its failure due to the gruelling set of tests it’s forced to endure, and the engineer won’t know which test caused the failure.

A better waterfall approach could be to run the sample through the high-temperature test and then only do some other subsequent tests that have nothing to do with the temperature test. That’s likely to be okay and return accurate results for each test. So it’s very important that you have some kind of logic built into the test plan so that once there is a failure engineering can follow that logic and understand what the root cause of the failure was so they can fix it.
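One way to build that logic into the test plan is to tag each test with the kind of stress it applies and check that no sample’s waterfall contains two tests from the same family. The Python sketch below is only an illustration: the `LabTest` class, the `stress` tags, and the `find_conflicts` function are hypothetical, and deciding which tests are truly unrelated remains an engineering judgement.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LabTest:
    name: str
    stress: str  # hypothetical tag for the kind of stress applied


def find_conflicts(waterfall: list[LabTest]) -> list[tuple[str, str]]:
    """Return pairs of tests in one sample's waterfall that share a stress
    family, so a later failure could not be attributed to a single test."""
    first_seen: dict[str, str] = {}
    conflicts = []
    for test in waterfall:
        if test.stress in first_seen:
            conflicts.append((first_seen[test.stress], test.name))
        else:
            first_seen[test.stress] = test.name
    return conflicts


# Hypothetical waterfall for one serialized sample.
risky = [
    LabTest("High temperature", "thermal"),
    LabTest("Button press", "mechanical wear"),
    LabTest("Low temperature", "thermal"),
]
safer = risky[:2]

print(find_conflicts(risky))
print(find_conflicts(safer))
```

A waterfall that comes back with no conflicts is easier to debug, because each failure points to only one plausible stress.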

Understanding what kind of test cases are appropriate for your product type

It’s very important to understand what kind of tests are necessary for your product. Sometimes you can find those in standardized tests such as those published by ASTM, IEC, and a number of others, and you can decide which of those test cases are appropriate for your product. However, there are times when you have to create a custom test. For example, suppose your product must be particularly durable and survive being dropped from two meters: that’s a custom drop test based on the requirements you want to meet, not those already outlined in a standard test.

Let’s say, for example, that you are trying to create a new type of keyboard. You have to think about the end-use cases, such as whether the end-user is going to use it like a typewriter at the office for just a few hours a day or whether it is going to be used by a gamer who’s going to sit and bang on the keys for many hours at a time. So in a product like this, where there is a low-end use case and a high-end use case, you have to keep both in mind.

Are most of your customers going to be gamers or just office users? If it’s office users, you may not need to test the keyboard as strictly, because the cost of making it more reliable may not be worthwhile if it isn’t used heavily enough in normal conditions to break. But if you want to be the leader in gaming keyboards it’s a totally different story. You’d better make it a lot more reliable so that it lasts and takes a lot of punishment, and the gamers will give you great reviews (they’re more likely to be active online and reviewing products and services).

Having considered how the product is going to be used and what it needs to achieve, you can start to formulate appropriate test cases. Take a high-end user like a gamer who’s going to be playing eight hours a day, 365 days of the year. You want the keyboard to last for at least two years. In general, a good rule is to test to double the usage you expect from the user, in this case, the number of keystrokes they’ll make. That could be several hundred thousand over two years, so you will probably want to test to over a million keystrokes in order to have a comfortable reliability margin. This way even if they happen to make 100,000 more keystrokes than estimated, you’ll be pretty certain that your product is not going to break within that time period.
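The arithmetic behind that rule of thumb can be sketched in a few lines of Python. The figure of 100 presses per hour on the most-used key is a hypothetical assumption made purely for illustration (your own usage data should replace it), and the function name is invented for this example.

```python
def keystroke_test_target(presses_per_hour: int, hours_per_day: int,
                          days_per_year: int, years: int,
                          margin: int = 2) -> tuple[int, int]:
    """Return (expected lifetime presses, test target with safety margin)."""
    expected = presses_per_hour * hours_per_day * days_per_year * years
    return expected, expected * margin


# Hypothetical heavy user: 100 presses/hour on the most-used key,
# eight hours a day, 365 days a year, for two years.
expected, target = keystroke_test_target(100, 8, 365, 2)
print(f"Expected presses over product life: {expected:,}")
print(f"Test target with 2x margin:         {target:,}")
```

With these assumed inputs the expected usage lands in the hundreds of thousands and the doubled target lands above a million, which matches the reasoning in the paragraph above.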

How to create a reliability test plan?

In order to create your test plan, you need to gather all the test cases you have created, either standard ones that are already available in the industry or customized ones (sometimes you may be using part of a certain standard only as a guideline and modifying it, which is fine) and put them all together in a spreadsheet. This is your test plan and it should also at least include the following:

- Names of the test cases

- The standard/s that is going to be used (if applicable)

- The conditions and test procedure per test case, for example, for a high-temperature test, define what temperature the oven needs to be set to and for what length of time the product sample needs to be tested in the conditions

- What test equipment is to be used (such as oven type)

- How many samples are needed

- What the criteria are for a pass and fail

- Any comments you may have about the minimum requirements
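If you prefer to build the spreadsheet programmatically, the columns listed above can be sketched as a small Python structure that exports to CSV. This is just one possible layout: the `PlanEntry` class, its field names, and every value in the two example rows (temperatures, durations, sample counts) are hypothetical placeholders, not recommendations.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class PlanEntry:
    name: str
    standard: str          # standard used, or "none (custom test)"
    procedure: str         # conditions and test procedure
    equipment: str
    sample_count: int
    pass_criteria: str
    comments: str = ""


def plan_to_csv(entries: list[PlanEntry]) -> str:
    """Serialize the test plan to CSV text that opens in any spreadsheet."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=[f.name for f in fields(PlanEntry)])
    writer.writeheader()
    for entry in entries:
        writer.writerow(asdict(entry))
    return buffer.getvalue()


# Hypothetical example rows.
plan = [
    PlanEntry("High-temperature storage", "IEC 60068-2-2 (used as a guideline)",
              "70 C for 72 hours, then functional check", "High-temperature oven",
              10, "Powers on; no cosmetic damage"),
    PlanEntry("Drop test", "none (custom test)",
              "10 drops from 2 m onto concrete", "Drop tester",
              10, "Fully functional after final drop", "Customer requirement"),
]

csv_text = plan_to_csv(plan)
total_samples = sum(entry.sample_count for entry in plan)
print(csv_text)
print("Total samples to request:", total_samples)
```

Summing the sample counts from the plan gives you the number to request from the prototype build, before accounting for any samples you can reuse.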

Once ready, take the test plan and review it with your engineering, manufacturing, and test teams. The key is to make sure that everyone is on board and okay with all the test cases, and that you didn’t miss any environments and/or use cases that need to be tested.

Testing equipment

There are a couple of ways to do product reliability testing, either in-house with your own testing equipment or in a third-party test lab. Each has its own pros and cons and you have to decide which one is best for your needs.

In-house lab

If you have an in-house test lab, of course, you need to do maintenance on the equipment (including regular verifications or re-calibrations) and you need staff to run the tests. That’s an overhead for the business, but when you do a lot of tests it becomes very cost-effective to do them in-house.

If you use standardized tests the equipment required is commonly available in the industry like a high-temperature oven, low-temperature oven, humidity oven, HALT chamber, drop tester, etc. Yes, they require investment, but they’re common so in many cases it is relatively small.

However, once in a while, you run into a certain test case where you must create your own test equipment. In this case, you need to have machinists and mechanical engineers who understand your vision and know the requirements exactly to help you develop and build that kind of test equipment. That’s going to be a much larger investment.

Outsourced testing

If you don’t run tests a lot and you’re only testing once in a while, then it’s best to outsource to a third-party test lab. They have all of the equipment already and also (in some cases) the technical staff who know what to do, and all you do is pay for the cost of the testing.

A third party lab is a good option if new tests need to be created. You would still need to pay for their work, but ideally they have the in-house expertise that many companies just don’t have. If you have built your own custom test equipment, you can send it to them to perform the tests rather than pay them to make it, too.

Doing the tests in the lab

Now that you’re ready to do the product reliability testing the most important requirements are that you must have people trained to do it and that you must have your test procedures ready for them before they begin.

How does this test need to be run? What equipment is needed? Who is going to perform the test/s? On how many samples?

You’ll need to answer these questions and gather the whole testing team together for training. Also, make sure that the equipment is in place and is properly calibrated. If purchasing new equipment, allow a buffer of two to four weeks ahead of the testing date to receive it, set it up, and calibrate it. If working with an outside test lab, reserve them and get the cost approved in advance for the same reason.

Reliability project management

Being a reliability engineer for a number of different products can be very time-consuming because you have to think about all of the points we’ve looked at so far. You also need a good program manager who is able to plan and schedule all the tests, obtain the results, and so on. Here are some basics that need to be managed when you’re testing for reliability:

- Prep samples. Prepare your samples in advance and ensure that they’re serialized (one through however many you have).

- Datasheet. Have your datasheet ready for use; the staff member will read and complete it for each sample being tested. It makes clear to everyone what they are testing and the test parameters, as well as the frequency (such as the number of drops in a drop test), the test equipment used, how many periods the test runs for, how long the test is going to take, and its rough costs.

Data analysis and creating the reliability test report

Once the testing is done and the datasheet is complete, the engineer analyzes the data and produces a test report.

Data analysis

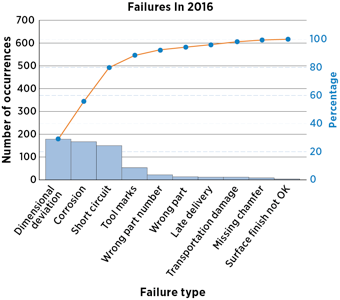

One of the simplest and most relevant to reliability methods is the ‘Pareto analysis.’ Here you put all the failures into a Pareto chart and determine which are the highest number of failure modes and which are the fewest. The Pareto chart will show you the top 20 per cent of the failures that are causing 80 per cent of the problems (80/20 rule). Here’s an example Pareto chart:

Image source: Qrius

You can see that the top failures are dimensional deviation, corrosion, and short circuits. Yes, there are other failure modes, but they don’t cause as many problems as this minority, which between them account for more than 80% of the occurrences. So if you focus on fixing just these 3 issues, you will have fixed 80% of the failures causing the prototypes to be defective.
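The same analysis is easy to reproduce from your own datasheets. The Python sketch below tallies failure modes, computes the cumulative percentage, and picks out the ‘vital few’ that reach the 80% mark. The failure counts are made-up illustration data (they are not read from the chart above), and the function names are hypothetical.

```python
from collections import Counter


def pareto_table(failures: list[str]) -> list[tuple[str, int, float]]:
    """Rows of (failure mode, count, cumulative %), most frequent first."""
    counts = Counter(failures).most_common()
    total = sum(count for _, count in counts)
    rows, running = [], 0
    for mode, count in counts:
        running += count
        rows.append((mode, count, round(100 * running / total, 1)))
    return rows


def vital_few(rows: list[tuple[str, int, float]], threshold: float = 80.0) -> list[str]:
    """Failure modes, in order, needed to reach the cumulative threshold."""
    selected = []
    for mode, _count, cumulative in rows:
        selected.append(mode)
        if cumulative >= threshold:
            break
    return selected


# Made-up failure log: one entry per observed failure.
failure_log = (["dimensional deviation"] * 40 + ["corrosion"] * 25
               + ["short circuit"] * 17 + ["cracked housing"] * 8
               + ["loose connector"] * 6 + ["cosmetic scratch"] * 4)

rows = pareto_table(failure_log)
for mode, count, cumulative in rows:
    print(f"{mode:<22} {count:>3}  {cumulative:>5}%")
print("Fix first:", vital_few(rows))
```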

Following the Pareto analysis, you have results and are ready to do failure analysis. Keep the failed samples and hold a meeting with the mechanical and other engineers to describe the failure modes you found. Mechanical, reliability, electrical, and other design engineers will then do failure analysis on the physical samples. So many teams are involved so that nothing slips through the net and we can be certain that the product going into production is reliable.

Creating the test report

A good test report should include the following:

- All the failure modes identified

- Images of identified test cases that failed

- A detailed description and photo of the failures including the conditions under which they failed (e.g. ‘Sample A’ broke on the 3rd of 10 drops, whereas ‘Sample B’ broke after 10)

Next, review the completed report with the team and obtain their feedback.

Using the data to improve the product in further builds

After the round of testing is complete and the data has been analyzed, one of the most important things a reliability engineer must do is to identify, by analyzing the data and checking the Pareto failure modes, what must be fixed in the next build so you have a much-improved product. Then in the next build, you should not encounter these issues and you will see what, if any, other issues are occurring (there should be fewer this time). Those are also fixed, and so on until you have an approved sample that’s ready for mass production.

It is critical not to change your testing procedure, test plan, or test methodology between builds.

If you change it from one build to the next, engineers won’t be able to work out why a test that passed in the first round of testing is now failing in the second. By maintaining consistency, you’re able to narrow down exactly where new failures occur between builds, because if you’ve done a good job and you didn’t change anything then what passed initially should still be passing.
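Keeping the plan fixed also makes build-to-build comparison almost mechanical. As a rough Python sketch, assuming each build’s results are stored as a mapping from test case name to pass/fail (the function names and the example results are hypothetical):

```python
def find_regressions(previous: dict[str, bool], current: dict[str, bool]) -> list[str]:
    """Test cases that passed in the previous build but fail in the current one."""
    return sorted(name for name, passed in current.items()
                  if not passed and previous.get(name) is True)


def plan_differences(previous: dict[str, bool], current: dict[str, bool]) -> list[str]:
    """Test cases present in only one build, i.e. the plan was changed."""
    return sorted(set(previous) ^ set(current))


# Hypothetical results for two consecutive builds (True = pass).
build_1 = {"High temperature": True, "Drop test": False, "Button press": True}
build_2 = {"High temperature": True, "Drop test": True, "Button press": False}

print("Regressions:", find_regressions(build_1, build_2))
print("Plan differences:", plan_differences(build_1, build_2))
```

An empty list of plan differences tells you the two rounds are comparable, so any regression points at a change in the product rather than a change in the test.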

Reliability growth modelling

This is when you improve the product from build to build and then, as you near high-volume production, stop doing component or engineering tests and strip the reliability test plan down to product-level testing only, in what’s known as a final reliability test before mass production starts. This must pass; otherwise, you will encounter issues in the field once the products are in consumers’ hands, which is bad news, as Samsung and Boeing will tell you.

How can you get help from Agilian?

At Agilian, we provide reliability engineering services to our customers who don’t have testing labs or experienced personnel to handle the testing.

We provide 2 options: standard or customized testing (for more reliability or durability). Customized testing would be more relevant to, say, medical or military ‘Hi-Rel’ devices that must be more reliable. On consumer products, our focus is usually failure mode testing and component level testing.

In our in-house testing lab, we have numerous pieces of equipment used for many product reliability tests required for electro-mechanical products and software to do MTBF testing and life analysis on the product. After this, we can also develop and build your prototype and start testing it.

You can also learn more about how we focus on product quality and reliability.

Further resources you might like about product reliability

These blog posts and podcast episodes are related to product reliability testing and development and manufacturing of products that will last:

- Why Do Importers Need Product Reliability Testing? [Podcast]

- How Bad Product Design Leads to Many Quality Issues

- How Reliability Testing Is Critical To Obtaining Great Mass-Produced Products

- Why Product Reliability Testing Is A MUST During Product Design [Podcast]

- Why The Most Common Reliability And Safety Testing Standards Are Limited

- How Many Product Samples Are Required For Reliability & Compliance Testing?

- Life Test To Determine Product Reliability

- HALT Testing (Highly Accelerated Life Test)

- MTBF Confirmation Testing (Mean Time Between Failures)

- How Can Poor Quality & Reliability Products Affect Your Business? [Podcast]

- How To Drive Your Chinese Suppliers To Improve Reliability

- Do You Need a Customized Reliability Test Plan?